2023 was an unprecedented year of AI innovation. At the application layer, we have seen a surge of vertical AI startups: new vertical application software players with LLM-native core products. Vertical AI is reinventing workflows across several end markets: finance, legal, procurement, healthcare, AEC, manufacturing and several others.

The rapid pace of innovation and growing end-market interest are leading many to believe 2024 might just be the breakout year for Vertical AI, the year it makes the critical transition from experimental pilots to mission-critical products.

While there is tremendous venture excitement about the emergence of vertical AI (highlighted by several venture posts over the past months), critical questions remain about how vertical AI moves from transformational products to enduring businesses:

- How will standouts create a long-term asymmetric advantage on product and technical capabilities?

- How will vertical AI startups stack up vs incumbents also adopting LLMs?

- Which vertical AI players will adapt best to a shifting AI landscape?

So what should we expect from Vertical AI in 2024? To answer that question, we will explore the historical impact of AI in vertical workflows, where we are today with LLMs, and what we can expect from the future.

Quick navigation

I. The shortcomings of traditional AI in vertical applications

II. Why LLMs are supercharging the application layer

III. Vertical-specific workflows are primed to be transformed by LLM applications (market map)

IV. Vertical AI builder considerations

V. Looking ahead: Technical innovations to catalyze vertical AI in 2024 and beyond

VI. Determinants of a breakout year in Vertical AI

I. The shortcomings of traditional AI in vertical applications

Historically, traditional (predictive) AI struggled to realize an “intelligent layer” within vertical software.

Prior AI efforts in vertical applications were challenged for several reasons:

- Data infrastructure challenges

- Large internal data collection and integration requirements: Traditional predictive models mandated high-quality structured datasets for training, limiting usage to enterprises capable of resource-intensive data collection and pre-processing. Furthermore, integration into existing IT stacks required heavy customization, preventing compatibility across the full organization.

- Data silos: Data was fragmented across organizations leading to less effective model outputs and limited potential for cross-learnings across external and internal AI application efforts.

- Model architecture challenges

- Limitations of rules-based architecture and transfer learning: Rules-based systems and limited transfer learning made traditional AI too rigid to adapt to new and dynamically changing use cases, often requiring significant retraining each time. For example, a traditional AI system trained on motorcycle images may require extensive retraining to recognize car images.

- Limitations processing unstructured data: Traditional AI models required highly structured data, often in predefined categories, which limited the use cases the technology could impact.

- Natural language processing and contextual understanding shortfalls: Prior AI models lacked sophisticated natural language processing and context, often relying on highly programmed queries. This limited the potential for a seamless user experience: a critical tenet of vertical applications and a catalyst for rapid user adoption.

II. Why LLMs are supercharging the application layer

Fundamentally, the previous efforts around AI applications needed an infrastructure, data, and AI model architecture renaissance: enter Large Language Models (LLMs).

The advent of LLMs not only solved the critical data infrastructure and resource challenges of traditional AI but also introduced new transformer model properties and training techniques, unlocking new potential for AI at the application layer.

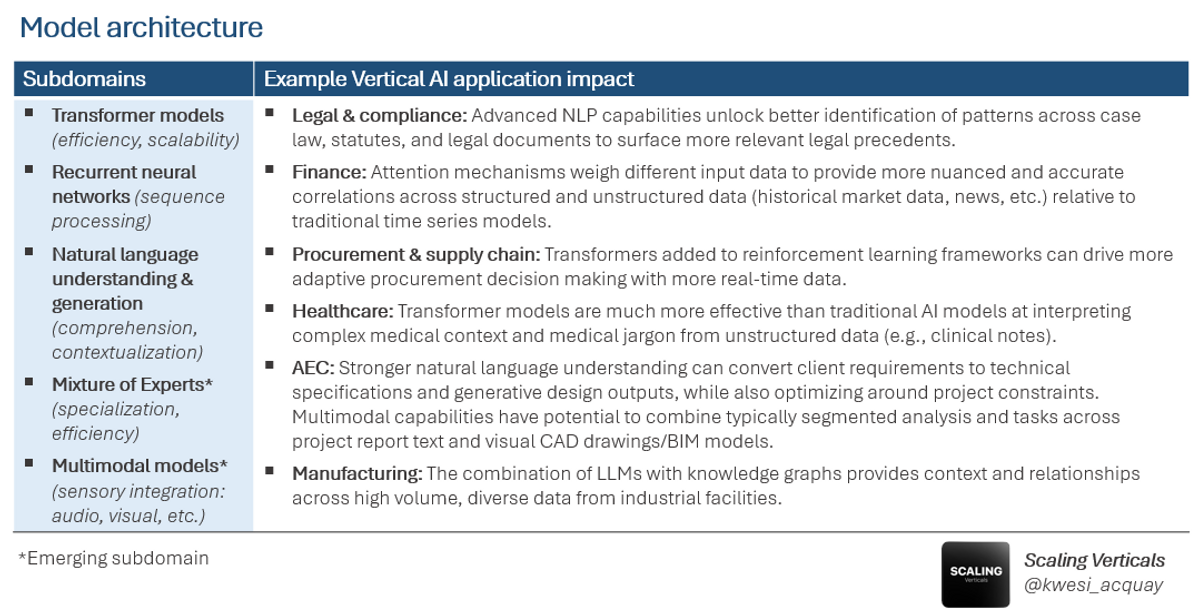

- Transformer Model Architecture: Transformer models process sequential data much better than traditional AI methods. Self-attention mechanisms weight each part of the input by its relevance to the rest, driving better context and more relevant outputs.

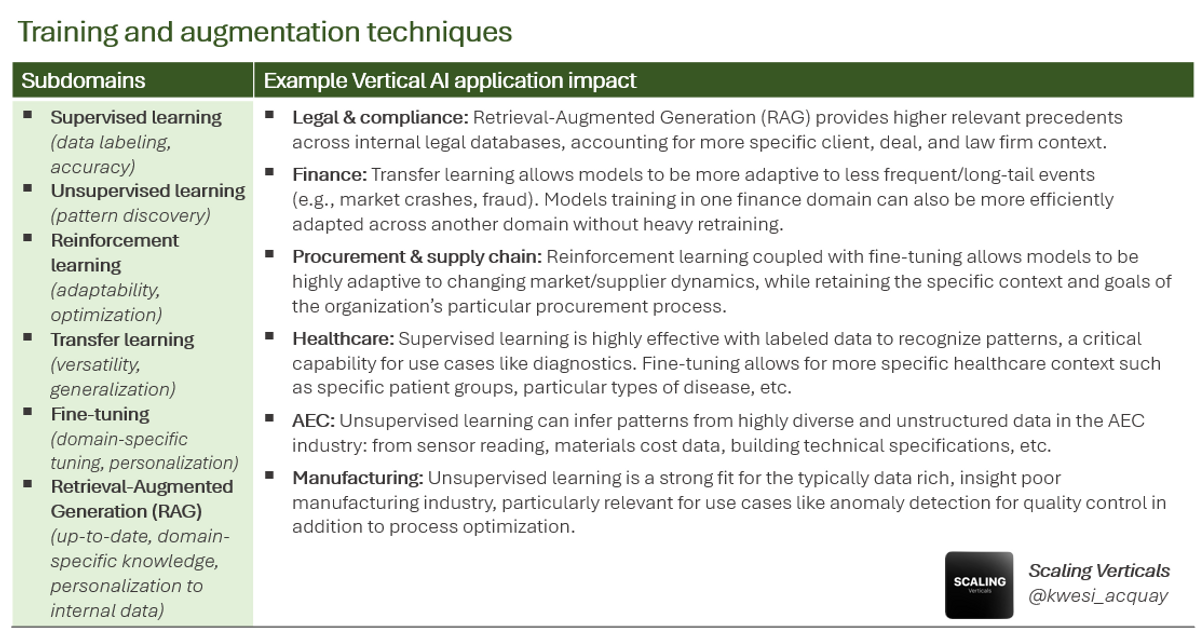

- Training and Augmentation Techniques (Supervised, Unsupervised Learning, RLHF, Transfer Learning, RAG): Several training techniques allow LLMs to learn from both labeled datasets (supervised learning) and unlabeled datasets (unsupervised learning). Reinforcement Learning from Human Feedback (RLHF) lets models learn from human feedback on their responses, adding greater context to AI-generated answers. Transfer learning offers a much more adaptable model that can apply knowledge and insights from one relevant domain problem to another without extensive retraining. Advanced techniques (fine-tuning, RAG, self-learning) allow for continuous improvement and adaptation to new data and use cases with limited human intervention.

- Advanced Natural Language Understanding: LLMs have strong natural language capabilities that let them interpret deeper context and nuance in human-like text, producing much stronger responses and a greatly improved vertical AI user experience.
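To make the self-attention idea above more concrete, here is a minimal sketch in plain Python. It is a simplification rather than a production transformer layer: it assumes queries, keys, and values are all the raw input vectors (no learned projections), and the three toy `tokens` vectors are invented for illustration.

```python
import math

def softmax(xs):
    """Turn raw scores into weights that are positive and sum to 1."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def self_attention(vectors):
    """Scaled dot-product self-attention with identity projections.

    Assumption for illustration: queries, keys, and values are the
    input vectors themselves; real models learn separate projections.
    """
    d = len(vectors[0])
    outputs, all_weights = [], []
    for q in vectors:
        # Score every position against the current one, then normalize.
        scores = [dot(q, k) / math.sqrt(d) for k in vectors]
        weights = softmax(scores)
        # Each output is a weighted average of all input vectors.
        out = [sum(w * v[i] for w, v in zip(weights, vectors)) for i in range(d)]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights

# Hypothetical toy embeddings: tokens 0 and 1 are similar, token 2 is not.
tokens = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0]]
outputs, weights = self_attention(tokens)
```

In this toy input, the first token places more weight on the second token (which points in a similar direction) than on the third, which is the sense in which attention weights inputs by relevance.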

These LLM properties provide unique building blocks for new applications to improve distinct vertical use cases. The tables below outline how these differentiated technical capabilities translate to industry use cases.

Note: Subdomain tables adapted from “From Google Gemini to OpenAI Q* (Q-Star): A Survey of Reshaping the Generative Artificial Intelligence (AI) Research Landscape” (McIntosh, Susnjak, Liu, Watters, Halgamuge)

III. Vertical-specific workflows are primed to be transformed by LLM applications

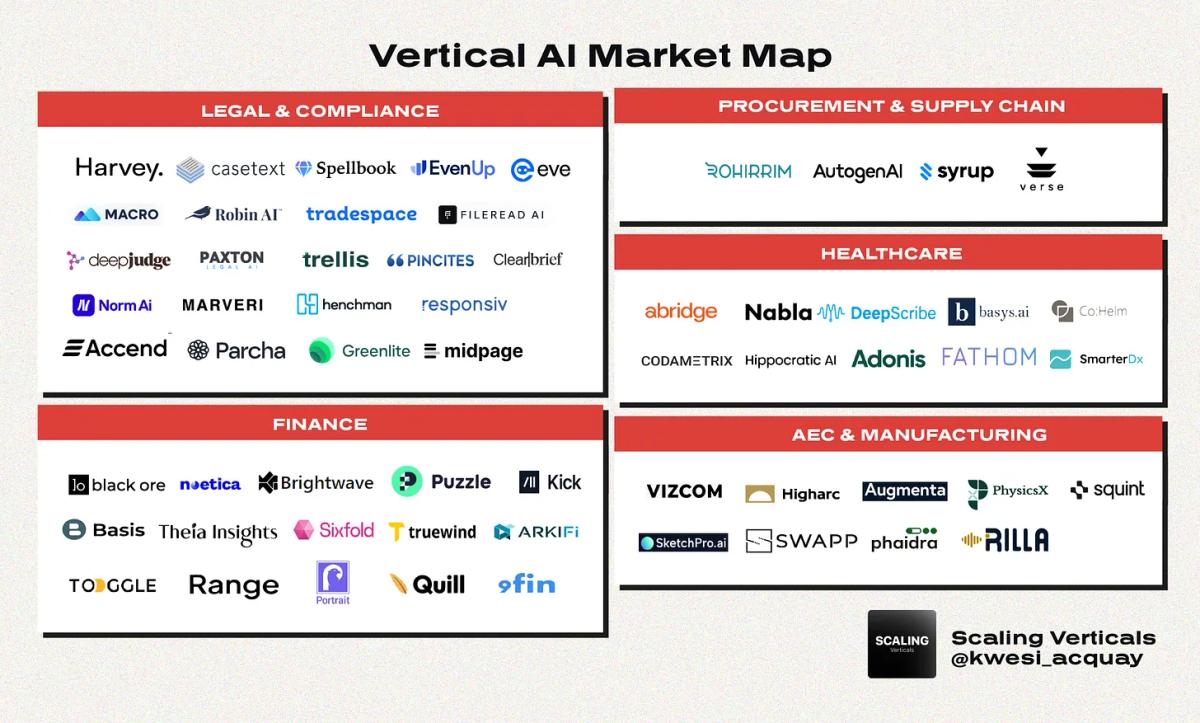

Vertical AI founders are innovating across several industry use cases and end markets.

- Legal & compliance: Harvey, Casetext, Spellbook, and Eve are reinventing research, drafting, and negotiating workflows across litigation and transactional use cases for Big Law and small/mid-market law firms. EvenUp gives personal injury law firms unique business leverage by automating demand letters, driving efficiency and improved settlement outcomes. Macro is leveraging LLMs to transform document workflow and collaborative redlining. Norm AI is tackling regulatory compliance with AI agents.

- Finance: Noetica and 9fin are adding much needed innovation to private credit and debt capital markets transactions. Brightwave is leveraging LLMs for investment professional workflows. Black Ore’s Tax Autopilot is automating tax compliance for CPAs and tax firms.

- Procurement & supply chain: Rohirrim and Autogen AI are automating the RFP bid writing process, leveraging LLMs for draft ideation and extraction of supporting company statistics and case studies for detailed RFP technical responses. Syrup is helping retail brands with more sophisticated demand forecasting for inventory optimization.

- Healthcare: Abridge, DeepScribe, Nabla, and Ambience are among a growing list of medical scribes leveraging AI speech recognition to automate real-time documentation of clinician-patient conversations.

- AEC & commercial contractors: Higharc and Augmenta are incorporating LLMs for generative design across homebuilding and commercial buildings. Rillavoice provides speech analytics for commercial contractor sales reps across home improvement, HVAC, and plumbing.

- Manufacturing: Squint leverages both Augmented Reality and AI to create a novel approach to industrial process documentation. PhysicsX is transforming physics simulation and engineering optimization for the automotive and aerospace sectors.

Note, this market map is a non-exhaustive list and will continue to be refined throughout the year. If you have any additions, I would love to hear from you at scalingverticals@substack.com or kacquay@redpoint.com.

IV. Vertical AI builder considerations

What should founders consider as they build in vertical AI? Below are a few learnings from spending time in the ecosystem over the last year.

Vertical AI product development is different from classic software development

- The non-deterministic nature of LLMs adds complexity to a software development and testing process that has traditionally relied on expected, repeatable behavior. Product teams will need to apply more qualitative judgment and industry professional expertise to ensure outputs meet expectations, balancing the need for sufficient, consistent LLM output against the desire to expand LLM use cases and product extensions.
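One way to think about testing non-deterministic output is to sample the same prompt many times and measure how often the answers satisfy a rubric written with industry professionals, rather than asserting one exact string. The sketch below is illustrative only: `fake_llm`, its canned answers, and the `passes_rubric` check are hypothetical stand-ins, not a real model call or a recommended rubric.

```python
import random

def fake_llm(prompt, rng):
    """Hypothetical stand-in for a model call; returns one of several phrasings."""
    candidates = [
        "The notice period is 30 days.",
        "Either party may terminate with 30 days notice.",
        "Termination requires notice.",  # omits the key fact
    ]
    return rng.choice(candidates)

def passes_rubric(answer):
    """Hypothetical expert-defined check: the answer must state the 30-day period."""
    return "30 days" in answer

def pass_rate(prompt, n_samples, seed=0):
    """Sample the same prompt repeatedly and report the share of passing answers."""
    rng = random.Random(seed)  # seeded so the evaluation run is reproducible
    results = [passes_rubric(fake_llm(prompt, rng)) for _ in range(n_samples)]
    return sum(results) / n_samples

rate = pass_rate("What is the termination notice period?", n_samples=200)
print(f"pass rate: {rate:.2f}")
```

A team could then set a minimum acceptable pass rate per use case and track it across prompt or model changes, instead of relying on a single expected output.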

LLM technique tradeoffs vary by industry

- Each industry has unique challenges that impact LLM technique tradeoffs. For example, several finance AI startups have to balance handling the complexity of tabular data while maintaining high accuracy. Legal AI LLMs must handle large volumes of legal text without losing the ability to pick up nuanced interpretation of case law and specific contract documentation.
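Retrieval-augmented generation (RAG), mentioned earlier, is one technique for working with large volumes of text: retrieve the few passages most relevant to a question and pass only those to the model. The sketch below is a toy version under stated assumptions: it ranks passages with simple word-count cosine similarity instead of learned embeddings, the sample `passages` are invented, and `build_prompt` only assembles the text a model would receive (no model is called).

```python
import math
import re
from collections import Counter

def tokenize(text):
    return re.findall(r"[a-z0-9]+", text.lower())

def cosine(a, b):
    """Cosine similarity between two word-count vectors."""
    common = set(a) & set(b)
    num = sum(a[t] * b[t] for t in common)
    den = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return num / den if den else 0.0

def retrieve(query, passages, k=1):
    """Return the k passages most similar to the query."""
    q = Counter(tokenize(query))
    ranked = sorted(passages, key=lambda p: cosine(q, Counter(tokenize(p))), reverse=True)
    return ranked[:k]

def build_prompt(query, passages, k=1):
    """Assemble the retrieved context and the question into one prompt string."""
    context = "\n".join(f"- {p}" for p in retrieve(query, passages, k))
    return f"Answer using only the context below.\nContext:\n{context}\nQuestion: {query}"

# Hypothetical contract snippets, for illustration only.
passages = [
    "The lease may be terminated by either party with 60 days written notice.",
    "The tenant shall pay rent on the first day of each month.",
    "The borrower must maintain a leverage ratio below 4.0x.",
]
prompt = build_prompt("What leverage ratio must the borrower maintain?", passages)
print(prompt)
```

The tradeoff the bullet above describes shows up in the choice of `k` and the similarity measure: retrieving too little can drop the nuance a lawyer cares about, while retrieving too much dilutes the context.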

Technical differentiation should translate to product differentiation in the eyes of the end user

- The recent proliferation of vertical AI tools has been a double-edged sword: on one hand, the surge in products has driven high end-market interest; on the other, too many AI tools can make it challenging for the end customer to see clear differentiation between new products. Vertical AI founders and sales teams will need to show, not just tell: tie your product’s technical differentiation to clear product differentiation in elevated user experience and ROI.

Go-to-market choices impact your product feedback loops

- There are natural sales cycle tradeoffs between selling to enterprise and mid-market/SMB, but there are also tradeoffs on product feedback loops. Given that a “standout vertical AI product” is often assessed by human judgment, it’s imperative to get product feedback from as many industry professionals as possible. Vertical AI players selling to enterprise will need to work closely with innovation teams to get recurring product feedback from the initial testing group of professionals. Separately, Vertical AI players also selling to mid-market/SMB should leverage the opportunity for more direct feedback to accelerate product development efforts.

Cross-industry product extensions can fuel platform potential and TAM upside

- When assessing long-term extensibility of your platform, consider unique product wedges that can add value cross-industry. For example, a vertical AI product for M&A lawyers may also add value for bankers and credit investors who are key stakeholders in the same transaction.

Play offense with the shifting AI infrastructure landscape

- Your asymmetric technical advantage today won’t necessarily be your asymmetric technical advantage tomorrow. Vertical AI application founders should proactively consider how new AI infrastructure shifts can benefit competitive advantage (instead of solely looking at shifts as a threat to defensibility). Founders should “look forward and reason back”: understand where AI innovation might be headed and what that means for your product and competitive strategy. For example, if you believe autonomous AI agents are on the horizon in your sector, how does that inform your near-term product roadmap? How will you build a more differentiated product and drive more ROI for customers?

V. Looking ahead: Technical innovations to catalyze vertical AI in 2024 and beyond

AI innovation isn’t slowing down anytime soon, creating opportunity for vertical AI players.

- Multimodal innovation: Integrating text, images, audio, and video into one AI framework. Cross-modal attention mechanisms can provide more nuanced context in real time across different data types (e.g. interpreting x-ray images alongside clinical note text to improve medical diagnostics).

- Multi-agent systems: Vertical AI productivity can greatly expand with specialized AI agents that work together to automatically carry out industry-specific tasks and self-learn to optimize processes without human involvement.

- Moving beyond transformer models: New architectures such as Hyena could potentially provide a viable alternative to transformer models in the future. Hyena's distinct data processing properties (long convolutions instead of transformers’ attention mechanism) and algorithmic efficiency (subquadratic time complexity vs transformers’ quadratic operation) could bring faster processing, better compute efficiency, and longer model context to several vertical AI use cases. Additional architectures like Mamba and RWKV will continue to push the boundaries of model capability and scalability.
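To give a rough sense of why subquadratic scaling matters for long contexts, the arithmetic below compares operation counts as sequence length grows. It is a back-of-the-envelope sketch, not a benchmark: it assumes `n * log2(n)` as a representative subquadratic cost and ignores constant factors, hardware effects, and model quality.

```python
import math

def quadratic_cost(n):
    """Operation count that grows with the square of sequence length."""
    return n * n

def subquadratic_cost(n):
    """Assumed stand-in for a subquadratic method: n * log2(n)."""
    return n * math.log2(n)

# Ratio of quadratic to subquadratic operation counts at each length.
ratios = {n: quadratic_cost(n) / subquadratic_cost(n) for n in (1_024, 8_192, 65_536)}

for n, ratio in ratios.items():
    print(f"n={n:>6}: n^2 / (n log2 n) = {ratio:,.0f}")
```

Under these assumptions the gap widens from roughly 100x at 1,024 tokens to roughly 4,096x at 65,536 tokens, which is why long-document verticals such as legal and finance stand to benefit most if these architectures prove out.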

VI. Determinants of a breakout year in Vertical AI

So what do we need to believe for a breakout 2024 in Vertical AI? Below are near-term catalysts we hope will fuel an exciting year in Vertical AI:

- End market adoption velocity hits key inflection points: from pilots to production with multiple users across the organization.

- Founders master an emerging vertical AI product playbook to develop intelligent software that deeply integrates into core workflows.

- Vertical AI startups make an effective case vs incumbents adding LLMs.

- New Vertical AI sectors emerge (e.g. defense).

- Technical innovation pushes the Vertical AI product frontier even further.

Scaling Verticals Substack: What to expect

If you’ve gotten this far, thank you for reading. I’m excited to launch the new Scaling Verticals Substack and dive into an exciting year in vertical AI. A few things to expect this year:

- Vertical AI Field Notes: an interview series featuring founders, builders, end users, and researchers

- Vertical AI survey (soon to be announced collaboration with Stanford research)

If you’re a founder, builder, or researcher in the vertical AI space, I’d love to chat and find ways to collaborate. Feel free to email me at scalingverticals@substack.com or kacquay@redpoint.com.

Note: For purposes of this post, we define vertical AI startups as new vertical application software players with LLM-native core products. We recognize this definition has some grey area and may converge with how we view “vertical software” over time. We exclude incumbent players embedding LLM workflows into long-established products, as well as Vertical LLM infrastructure providers.